What Systems and Processes Do Donors Use for Measuring Aid Effectiveness?

Donors increasingly issue guidelines for monitoring and evaluation and provide technical support to aid partners and grantees. These reporting requirements are intended to facilitate aggregating and summarizing results information across countries, grantees, and programs. Even with consistently applied measurement and reporting standards, however, donors may still face challenges in measuring and evaluating their overall or collective results and effectiveness.

This is the second in a series of four posts presenting evidence and trends from the Evans School Policy Analysis and Research (EPAR) Group’s report on donor-level results measurement systems. The first post in the series provides an introduction to donor-level results measurement systems and outlines the theoretical framework, data, and methods for the research.

This post focuses on the systems and processes donors use for measuring aid effectiveness. EPAR reviewed evidence from 22 donor results measurement systems: 12 bilateral organizations and 10 multilateral organizations. Part one of this post considers how donors organize their results measurement systems, and part two examines the levels at which donors measure results and evaluate performance.

How Donors Organize Results Measurement

Results measurement at the donor level relies on data collected at the point of program implementation and aggregated upward. Donor organizations therefore often set expectations and provide guidance to grantees and partners on their preferred approaches to measuring and evaluating results. Half of the donors reviewed (11 of 22) provide grantees with guidelines or standards for data collection or aggregation. For example, the African Development Bank's ‘Approach Paper’ sets out expectations for data collection and evaluation methods, program activities, and resource requirements, among other issues. Four other donors provide only partial guidance, or plan to provide guidance but have not yet done so.

Ten of 22 donors also provide oversight to help grantees assure and verify data quality. Methods used to verify data include independent internal committees for data review and grantee-based mechanisms, such as self-assessment or peer assessment of validity. One exception, Danida, uses external evaluators to verify its data. In some cases donors provide technical support to improve data quality; in others, grantees receive only general guidance on verifying data, and it is unclear how much that guidance is used.

Though grantee results measurement and reporting are important to donors’ ability to assess overall performance, fewer than half of donors (nine of 22) provide consistent standards or guidelines for grantee reporting. This lack of standardized reporting requirements limits donors’ ability to aggregate results information across grantees and projects. Regular, detailed reporting by grantees (for example, on expenditures, budgets, targets, outcomes, and lessons learned) does not necessarily resolve the problem of inconsistent reporting standards. An OECD review of results-based management, for instance, notes that although many donors required detailed annual reporting, the quality of those reports varied.

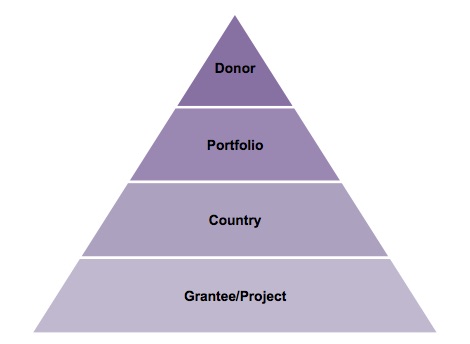

Depending on how results measurement is coordinated and aligned, donors may also choose to aggregate results at several levels (Figure 1): the grantee/project level (individual programs), the recipient-country level (all programs within a country), and the portfolio level (particular groups of programs within and across countries). The decision to report findings at different levels depends on how each organization expects to use results information and on the information demands of its stakeholders.

Project-Level Results Measurement

Donors regularly receive reports on activities and results of the projects they fund, and most of the donors we reviewed (15 of 22) describe specific processes to monitor and evaluate results at the project level. These processes include conducting project evaluations to assess results, providing oversight through a contact person, and establishing an evaluation committee to validate reports. For example, the World Food Programme has an Evaluation Policy document which describes standards for project evaluations.

Inadequate data collection systems are a frequent challenge for collecting and reviewing project-level results. Nine of 22 donors report challenges, including developing effective standards and guidance for staff, clearly linking project goals and strategies to the results to be measured, and missing baseline data or data that are not appropriately disaggregated. These challenges make it harder for organizations to aggregate results above the project level.

Recommendations for improving project-level results measurement vary and are largely tied to individual organizations’ specific challenges: for example, developing a “culture of evaluation” and improving methods for evaluating more challenging interventions (Agence Française de Développement). Many of the recommendations in donors’ internal or peer reviews focus on better management of project-level monitoring systems so that relevant results data are collected. Thirteen of 22 donors require grantees to develop a theory of change, logic model, results chain, or results framework. These tools are designed to identify the key indicators and outcomes to measure and to ensure they are included in grantees’ monitoring and evaluation plans.

Country-Level and Portfolio-Level Results Measurement

In addition to measuring project-level results, donor organizations may also have processes for measuring results at the country or portfolio level, across multiple programs. Fifteen of the 22 donors reviewed report having processes for country-level results measurement. In many cases, donors aggregate and summarize country-level results information in their annual reports. Some donors, however, run specific evaluation programs at the country level. For instance, Canada’s Department of Foreign Affairs, Trade and Development reviews all countries within each five-year rolling evaluation program.

Ten donors mention challenges with measuring results at the country level. The most common challenges include poor data quality and unavailable baseline data. Some organizations (e.g., African Development Bank, Asian Development Bank, Dutch Ministry of Foreign Affairs) describe the importance of a clear results framework in collecting and reviewing results at the country level and aligning time-trend and other indicators across programs.

Reporting of results at the portfolio level (i.e., aggregating across multiple programs in specific thematic areas) is also common in many organizations’ annual reports. Eighteen of 22 donors collect and review some results at the portfolio level. For example, the 2012 annual report of the Norwegian Agency for Development Cooperation (Norad) describes portfolio evaluations for agriculture and food security, climate and forest, East Africa, people with disabilities, and oil for development.

Eight donors report challenges with or recommendations for measuring portfolio-level results. Challenges described in the documents reviewed are similar to those at the country level: issues of inadequate baseline measures, poor alignment of indicators, lack of results frameworks, and insufficient harmonization of strategies and goals. Donor recommendations for improvement at this level include providing more guidance for collecting and aggregating data (USAID) and developing coherent theories of change to inform data collection (Norad).

Donor-Level Results Measurement

Results information from the project, country, and portfolio levels is useful for managing and improving programs, projects, and policy, and also for institutional accountability and strategic management. Results at these levels are less useful, however, for high-level political accountability and results reporting. For this, organizations aggregate and assess results at the overall donor level.

Eighteen of 22 donors describe processes to review and evaluate performance aggregated across all projects, countries, and portfolios. An additional three organizations describe plans to institute a process for aggregating results at the donor level. Results at the organizational level are usually presented in annual reports. For example, [IMF Annual Reports](<http://www.ieo-imf.org/ieo/files/annualreports/AR_2014_IEO_full report.pdf>) summarize performance in a variety of areas, including surveillance, financial support, capacity development, risk management, internal governance, staffing, and transparency. The types of results reported in donor annual reports vary widely and will be the subject of the next post in this series.

Over half of the donors (12 of 22) mention challenges in collecting, reviewing, and aggregating results at the donor level. Many challenges resemble those at other levels of results measurement, but organizations also report challenges specific to the donor level, including lack of baseline and financial data, difficulty attributing results to organizational activities, and difficulty assessing longer-term results. Another set of challenges relates to the ability to aggregate data, including difficulties communicating across agencies and too much flexibility in how grantees monitor and report. Recommendations discussed by the donors we reviewed include providing more guidance for grantee reporting (Norad), clarifying policies for validating data (Asian Development Bank), using a results framework that connects strategies with desired results ([Agence Française de Développement](<http://www.oecd.org/dac/peer-reviews/OECD France_ENGLISH version onlineFINAL.pdf>)), and implementing real-time monitoring systems (Global Fund).

Donor-Level Performance Evaluation

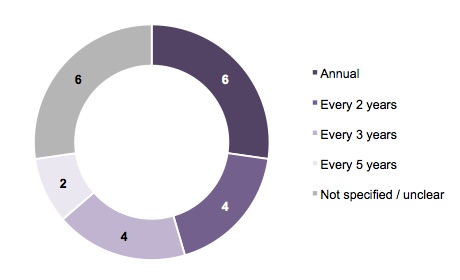

In addition to aggregating results to the donor level, some donors also evaluate those results. Of the 22 donor organizations reviewed, 18 conduct periodic evaluations of their own overall aid performance, which aim to assess performance across all organizational programs (Figure 2). Fourteen of the 22 donors (about two-thirds) conduct these evaluations internally, meaning that the donors themselves carry out the organization-wide evaluation. The remaining eight do not assign evaluation responsibilities to internal groups but instead outsource evaluation activities to independent external reviewers.

Common challenges for donors in managing organizational evaluation relate to lack of financial resources, staff capacity, and internal coordination. Some donors describe inadequate technical support ([Vivid Economics’ review of donor M&E frameworks](<http://www.afdb.org/fileadmin/uploads/afdb/Documents/Generic-Documents/Monitoring and evaluation frameworks and the performance and governance of international funds.pdf>)), while others note that staff do not put all of their training into practice because of time and resource limitations (Norad). Incentives also constrain the scope and relevance of evaluations, which are sometimes limited to interventions that are “easier” to evaluate or more likely to produce positive results (World Bank).

Results from individual project and portfolio evaluations feed into organization-wide evaluations, so following recommended practices for overcoming challenges in lower-level evaluations can also improve donor-level evaluations. Donor recommendations for addressing these challenges include budgeting and planning time for evaluation in program management decisions (Australia’s Department of Foreign Affairs and Trade), offering comprehensive training for program staff (Norad), providing more clarity on evaluation metrics (Norad), and including an evaluation specialist on each evaluation team (USAID).

EPAR finds that a majority of donors provide grantees with support and guidance on monitoring and evaluating results, have processes for aggregating results to the donor level, and conduct organization-wide evaluations every one to three years. Many donors also measure and report results at more than one level, usually the donor level and one lower level such as the project level (14 of 22) or the country level (8 of 22). There is wide scope for donors to improve their results measurement efforts, particularly by overcoming common challenges such as lack of access to monitoring and evaluation expertise, inadequate funding and staff capacity, poor data quality, and lack of alignment of indicators and reports. The next post in this series examines the types of donor-level results measures used to evaluate aid funding.

References to evidence linked in this post are included in EPAR’s full report.
