How Do Donors Use the Results Data They Collect?

Donors use aid results information for several purposes, from ensuring accountability to external stakeholders to improving both organizational operations and program-level outcomes. A recent study, however, argues that donors’ aggregate results measures are not sufficient for securing donor accountability or for evaluating aid effectiveness, and highlights several challenges and potential adverse effects of measuring aggregate organization-level results.

This is the final post in a series of four presenting evidence and trends from the Evans School Policy Analysis and Research (EPAR) Group’s report on donor-level results measurement systems. The first post in the series provides an introduction to donor-level results measurement systems and outlines the theoretical framework, data, and methods for the research. The second post examines the systems and processes donors use for measuring aid effectiveness, and the third post explores the types of results measures donors use to evaluate their performance.

This post focuses on donors’ processes for reporting and sharing results information and for using this information to inform organizational learning, strategy, and planning. Our report summarizes the evidence from reviews of 22 government results measurement systems, including 12 bilateral organizations and 10 multilateral organizations. Part one considers donors’ systems for sharing results information, and part two examines processes for incorporating these results.

Systems for Sharing Results Information

Communicating results information is a vital part of any results-based monitoring and evaluation system. Information sharing enables learning and, ultimately, improves organizational and program performance. Dissemination of information can occur internally (i.e., within the organization) and externally (i.e., among grantees, partners, and the public). EPAR finds that 14 of the 22 donors reviewed have publicly available rules for reporting donor-level results, but the sharing of results internally and externally is not always explicit. Results are most commonly reported through performance and annual reports, but in some cases through corporate results frameworks, strategic evaluations, and/or organization-wide scorecards.

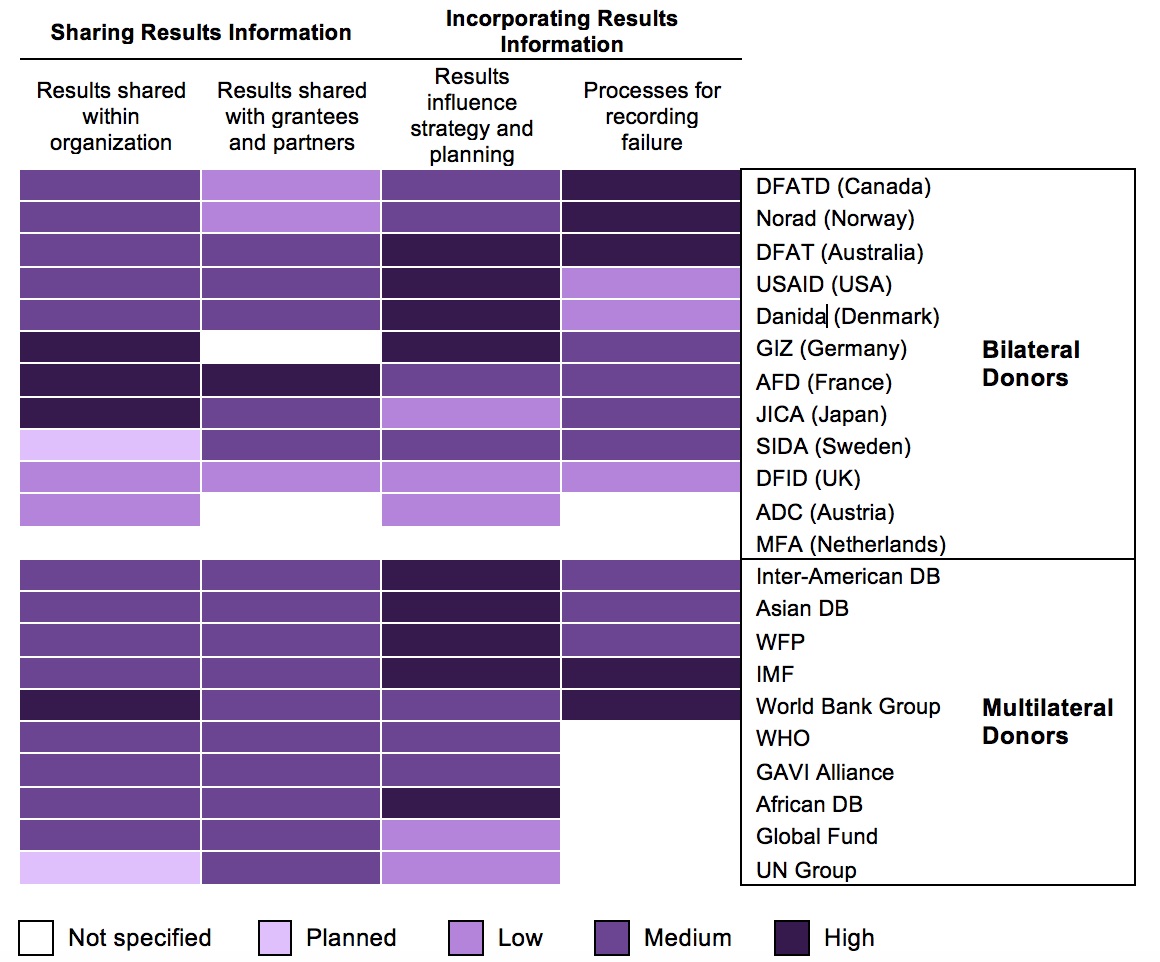

To assess donors’ attention to sharing and incorporating results information, EPAR rates donors across four dimensions (Figure 1). The first two dimensions examine whether results are shared within the organization (column 1) and with grantees and partners (column 2). The third and fourth dimensions consider donors’ systems for using results information to influence strategy and planning (column 3) and whether processes exist for recording failure (column 4).

Figure 1: Systems for Sharing and Incorporating Results Information

EPAR rates three bilateral organizations and one multilateral organization as having “high” levels of internal results sharing (Figure 1, column 1), meaning these donors demonstrate evidence of consistently and systematically sharing results information. For example, the World Bank Group embeds corporate scorecard indicators in Managing Directors’ performance Memoranda of Understanding. EPAR further categorizes 12 (five bilateral and seven multilateral) organizations as having a “medium” level of attention to internal sharing of results. These organizations may not explicitly share reports among departments, but they post these reports publicly on a website and may also disseminate them internally. Two (both bilateral) organizations are rated “low” as they describe challenges to sharing results information internally, such as a lack of internal communication about lessons learned from successful projects both among institutions and across thematic and recipient-country groups within the organization.

EPAR further rates donors on their attention to sharing results information with grantees and implementing partners (Figure 1, column 2). AFD (France) is rated as “high” because it has processes for systematically sharing results information with and receiving feedback from external stakeholders. We classify 15 out of 22 organizations as having a “medium” level of attention to sharing information with implementing partners and grantees. Three organizations receive a “low” classification due to demonstrated challenges in successfully communicating with partners or implementers. Peer reviews of Norad (Norway) and MFA (Netherlands) provide some suggestions for improving results sharing, from publishing recommendations and using seminars, workshops, and conferences to disseminate results to producing short, informative executive summaries and non-technical briefs.

Systems for Incorporating Results Information

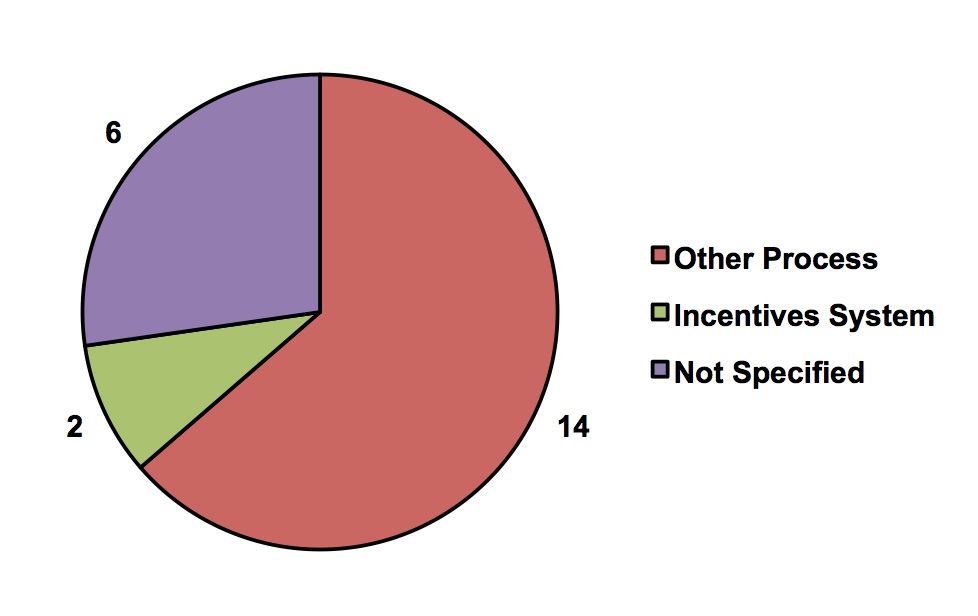

Figure 2: Types of Systems for Incorporating Results Information

The ultimate goal of most results-based monitoring and evaluation systems is to both continuously share results information and integrate these results to improve organizational and program performance. EPAR finds that 16 of 22 donor organizations describe regularly incorporating results information into their activities (Figure 2). Only the World Bank Group and DFATD (Canada), however, explicitly indicate the use of a formal set of measures designed to positively and/or negatively motivate employees (i.e., incentive systems) to incorporate results. The other 14 donors with established processes for incorporating results information describe a variety of processes, such as using knowledge management documents and establishing policies for organizational learning. Six organizations do not specify whether they have a system for incorporating results information or do not consistently follow prescribed processes.

Among these different types of systems for incorporating results information, donors vary in the amount of attention they give to using results for planning, strategy and project implementation (Figure 1, column 3). We rate nine of 22 organizations as “high”, meaning that they have systems to ensure that they consistently incorporate results information into organizational planning and implementation. For instance, a 2014 Peer Review of Danida (Denmark) concludes that Danida’s organizational culture is strongly results-oriented and both management and staff are committed to using results information. A further seven organizations receive a “medium” rating as they have organizational documents outlining the importance of using results information in planning, but there is not a clear process by which this is done. Five organizations receive a “low” rating, indicating challenges with using results information or failure to prioritize learning from the results of evaluations.

Results information is not always positive, and organizations may have insufficient incentives for reporting negative results and failures. EPAR rates five of 22 donors as giving “high” attention to processes for recording failures, showing evidence of explicit cases and specific processes for reporting and incorporating lessons from failures. Seven organizations are rated “medium”, demonstrating some evidence of reporting failures, but no evidence for a specific process for learning. Three donors are rated “low”, with evidence of barriers to reporting failure in the organization. A meta-evaluation of quality and coverage of USAID evaluations, for example, reports that unplanned results or alternative causes of outcomes are not commonly recorded due to inadequate expertise on the evaluation teams.

Challenges in Using Results Information

Donors describe three common challenges to using results information. First, as described above, some organizations do not provide sufficient incentives to disseminate and learn from negative results and failures. Norad reports that lacking an incentives system or sanctions hinders the use of results information and recommends that the Norwegian Ministry of Foreign Affairs publish examples of results information used well to incentivize greater use. Second, the Asian Development Bank, Danida, Norad, USAID, and the World Food Programme report that management or other decision-makers do not use recommendations or lessons learned from evaluations because evaluation reports are too long, difficult to read, or contain recommendations that are not specific enough to act on. Relatedly, a review of the African Development Bank reports that results information is not used because of a long lag time (up to three years) between the completion of the project and the availability of results information. Finally, Danida, DFAT (Australia), DFID (UK), and Norad report a weak culture for using results information, which may contribute to inconsistent instructions from management on how to describe the performance of programs.

EPAR finds that almost all donor organizations have processes for sharing results both within their organization and with grantees and development partners. For most donors, however, these processes neither involve explicit information sharing nor extend beyond publicly publishing results information. Similarly, a majority of donors have organizational documents emphasizing the importance of using results information in planning and of reporting negative results, but few donors incorporate results information systematically and consistently. There is wide scope for donors to improve their results measurement efforts, particularly by providing incentives for using results in decision-making; disseminating evaluation reports with specific, concise, and understandable recommendations; and fostering a results-oriented organizational culture that values organizational learning.

References to evidence linked in this post are included in EPAR’s full report.
