Measuring digital transformation? Get real.

This piece was originally published by The Data Values Digest.

How digitally developed is your organization? If you don’t know, you’re not alone: Despite what many indices, scorecards, and self-assessments would lead you to believe, there’s no single measure of digital transformation—defined as adopting digital technologies to improve efficiency, value, or innovation. As countries embark on digital transformation at a national scale, they need to monitor their progress, evaluate the impact of initiatives, learn from and adapt approaches, and—perhaps most importantly—demonstrate returns on investments. However, even in the private sector, fewer than 15 percent of companies can quantify the return on investment of their digital initiatives.

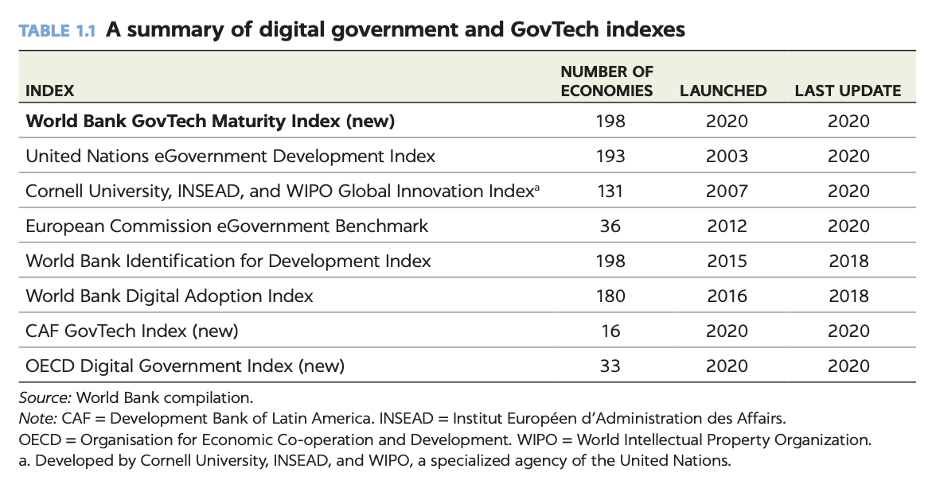

Still, multiple digital transformation indices and self-assessments aim to help countries evaluate their progress and make the case for digital investments (see a sample of these in the chart below). In effect, they also propose theories of how countries should achieve digital transformation. The problem is that there is no “digital utopia.” Although digital transformation is crucial to global development, no single measure presents an objective picture of progress in this area. Understanding the limits of existing measures is key to measuring and targeting investments in digital transformation effectively.

No one-size-fits-all approach

Countries, like companies, have varying baseline capacities for going digital. But many digital transformation measurement frameworks still rank or score countries against one another. These frameworks assume that a specific recipe of policies, human capacity, physical infrastructure, and data standards will result in successful digital adoption. Yet there is little evidence to support this theory, and few clear examples of what a “successful” digital transformation looks like.

While some evidence suggests that a top-down, whole-of-government approach to digital transformation is ideal, few digital development initiatives are implemented at this scale. To better understand the impacts of digital transformation initiatives, users of these frameworks should be realistic about which aspect of digital transformation an initiative targets, and use only the metrics that relate to that aspect.

Oversimplifying challenges, especially at the “last mile”

Well-intentioned efforts to introduce digital technology and data often create and reinforce power imbalances, especially at the last mile. Existing measurement frameworks focus on digital for digital’s sake, without a clear connection to improvements in people’s lives. Measuring that connection means asking questions such as: Do e-government initiatives result in improved service delivery, even at the “last mile”? How valuable are 5G or e-government services to rural villages with unreliable access to electricity and low levels of digital literacy? How many citizens prefer using digital platforms? Do people trust their government not to abuse digital technologies for surveillance or use their data for nefarious purposes?

Countries and development agencies are increasingly aware of the “last mile” challenges of digital adoption, such as connectivity, literacy, affordability, relevance, and trust. To better measure the success (or failure) of digital initiatives, measurement frameworks should neither oversimplify these challenges nor understate their impact on people’s daily lives. Some additional, more useful measures of success might include:

- Levels of trust in data and digital technologies,

- Government transparency on uses of data generated from digital services,

- Levels of participation and engagement in data governance agreements, and

- People’s rates of satisfaction with digital tools and services.

Downplaying the impact of bottom-up and locally led initiatives

Existing digital transformation measures are framed around a top-down, whole-of-government approach, but this isn’t how most governments or development initiatives work in practice. Even though the 2020 UN E-Government Survey underscored “the need to conduct separate assessments at the national and local levels,” metrics on digital transformation are typically available only at the aggregate, country level; key disaggregated and subnational data are not available in most countries. This gap matters: data on digital adoption and use, broken down by group, are critical to addressing the digital divide between men and women, urban and rural areas, high- and low-income households, and other groups. Yet data at this level of granularity are rarely made public (if they exist at all).

Local-level assessments of digitalization are rare, and little guidance or evidence exists on how subnational and national digitalization efforts should connect to support data and systems interoperability. Small-scale digital transformations at the county, state, or regional level should not be discounted. This is the level at which digital initiatives are typically implemented, and arguably where they have the most potential to impact communities directly.

Digital transformation is still worth measuring

It’s tempting to throw up our hands and say that digital transformation is too complicated and diverse to capture in a simple measure. But there are compelling reasons to find better ways to measure countries’ progress. Such information equips governments and development agencies to make the case for investment and measure impact. It can also help identify and solve “last mile” challenges.

So how can we do better? When trying to assess the value and impact of digital transformation, we can:

- Be specific and realistic about which problems can be addressed with digital technologies;

- Seek to understand how the presence or absence of a digital tool impacts people’s daily lives; and

- Consider the value of alternative approaches to digital transformation, such as those that are locally led or sector-specific.

As long as digital transformation measurement frameworks fail to address the realities of “going digital,” understanding returns on investment will continue to elude decision-makers amid persistent inequalities.
